A Bayesian system for noise-robust binaural sound localisation for humanoid robots

Kothig A., Ilievski M., Grasse L., Rea F., Tata M.

Published in IEEE International Symposium on Robotic and Sensors Environments (ROSE), 2019

Abstract

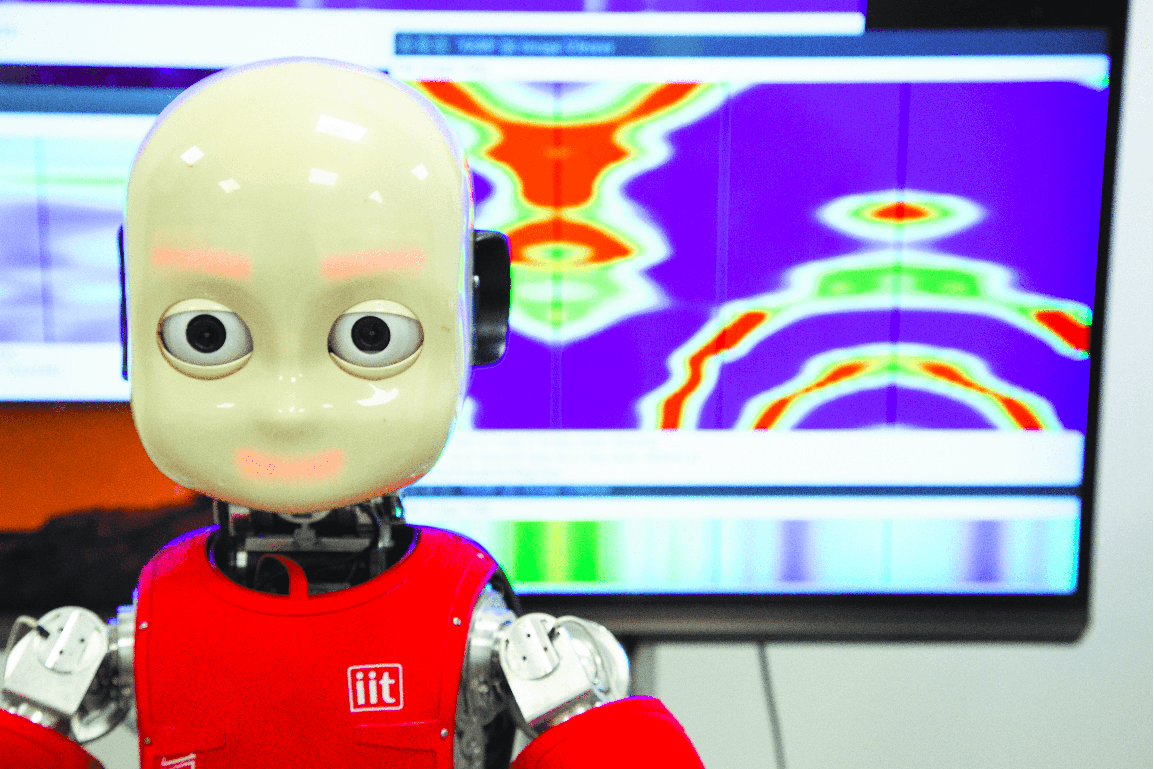

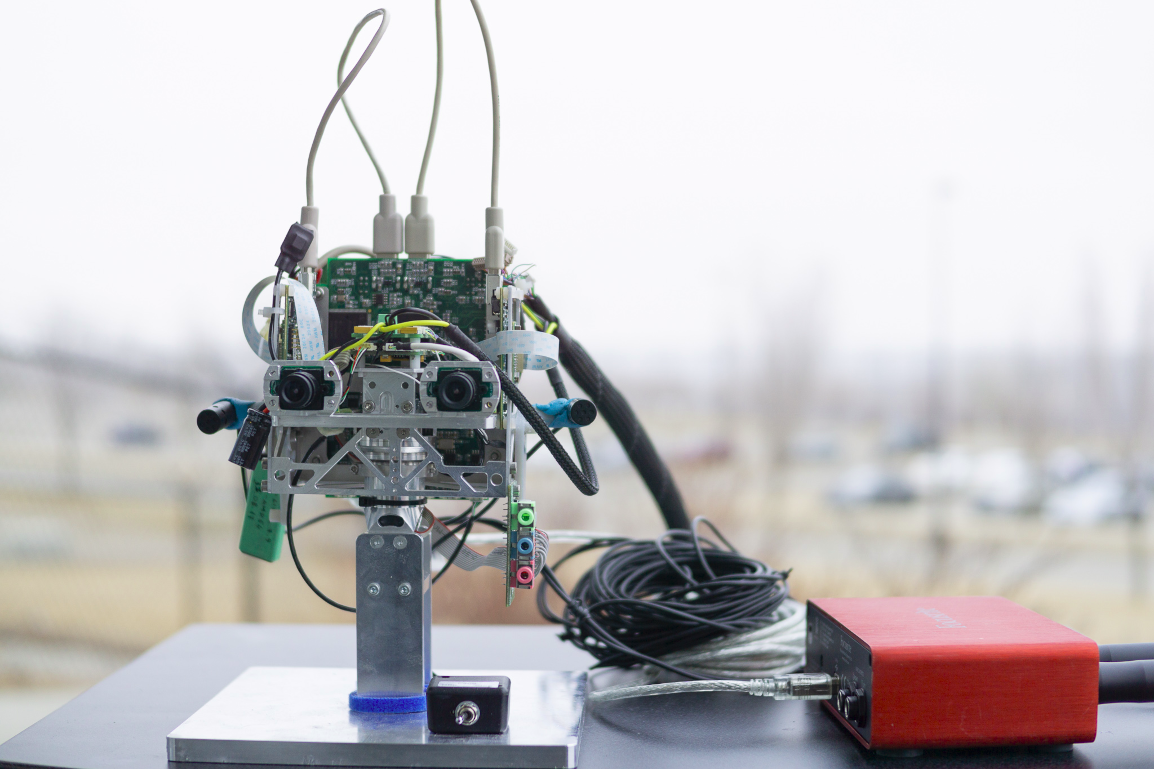

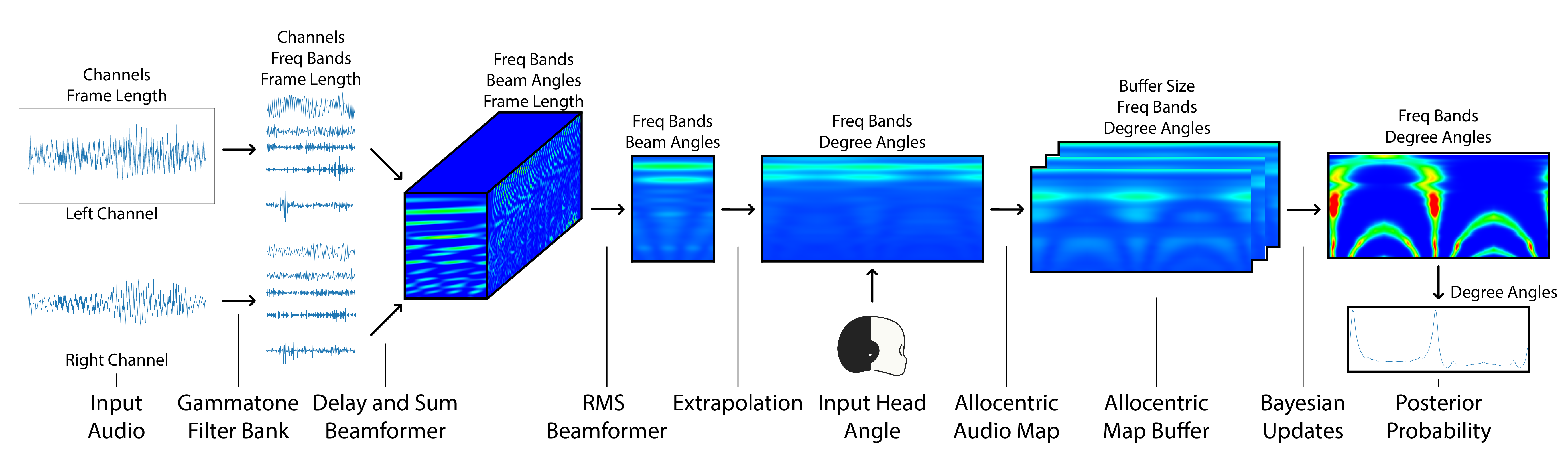

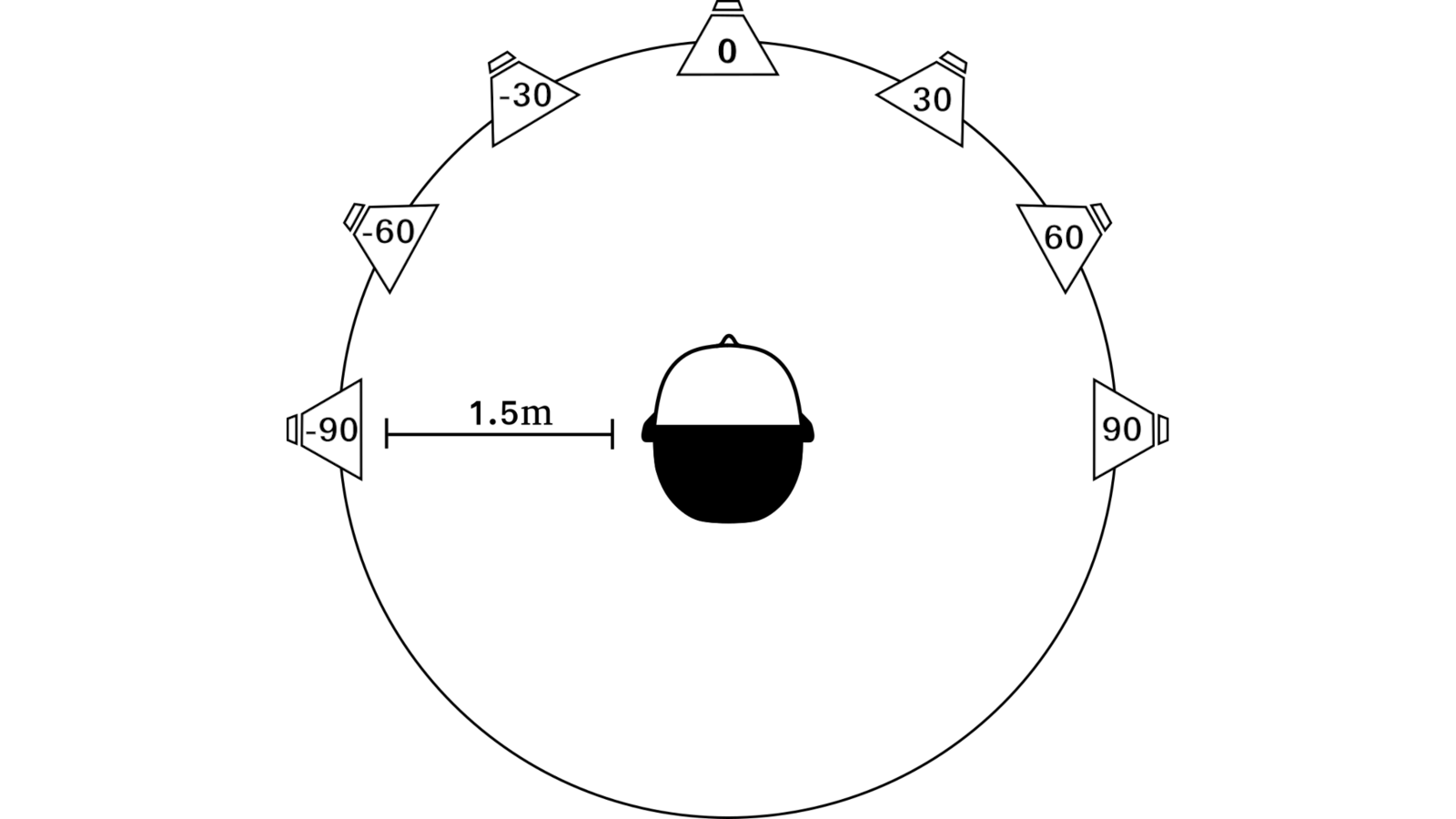

Humans make use of auditory cues to navigate and communicate in complex acoustic environments, but this remains out of reach for most binaural robots. This process remains computationally difficult due to multiple distinct acoustic events mixing together to create a single informationally dense audio stream, which needs to be decomposed. In this paper we introduce a Bayesian method of combining acoustic data from multiple head locations to help resolve ambiguities and better decompose the acoustic scene into individual sound sources. We go on to show that the method utilizing head movements performs significantly better than its static counterpart.

Images

How to cite?

@inproceedings{kothig2019bayesian,
  title={A Bayesian System for Noise-Robust Binaural Sound Localisation for Humanoid Robots},
  author={Kothig, Austin and Ilievski, Marko and Grasse, Lukas and Rea, Francesco and Tata, Matthew},
  booktitle={2019 IEEE International Symposium on Robotic and Sensors Environments (ROSE)},
  pages={1--7},
  month={June},
  year={2019},
  organization={IEEE},
  url={http://dx.doi.org/10.1109/ROSE.2019.8790411},
  doi={10.1109/rose.2019.8790411}
}