Connecting humans and robots using physiological signals – closing-the-loop in HRI

Published: August 2021

Kothig A., Muñoz J., Akgun SA., Aroyo AM., Dautenhahn K.

Published in the 30th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2021

Abstract

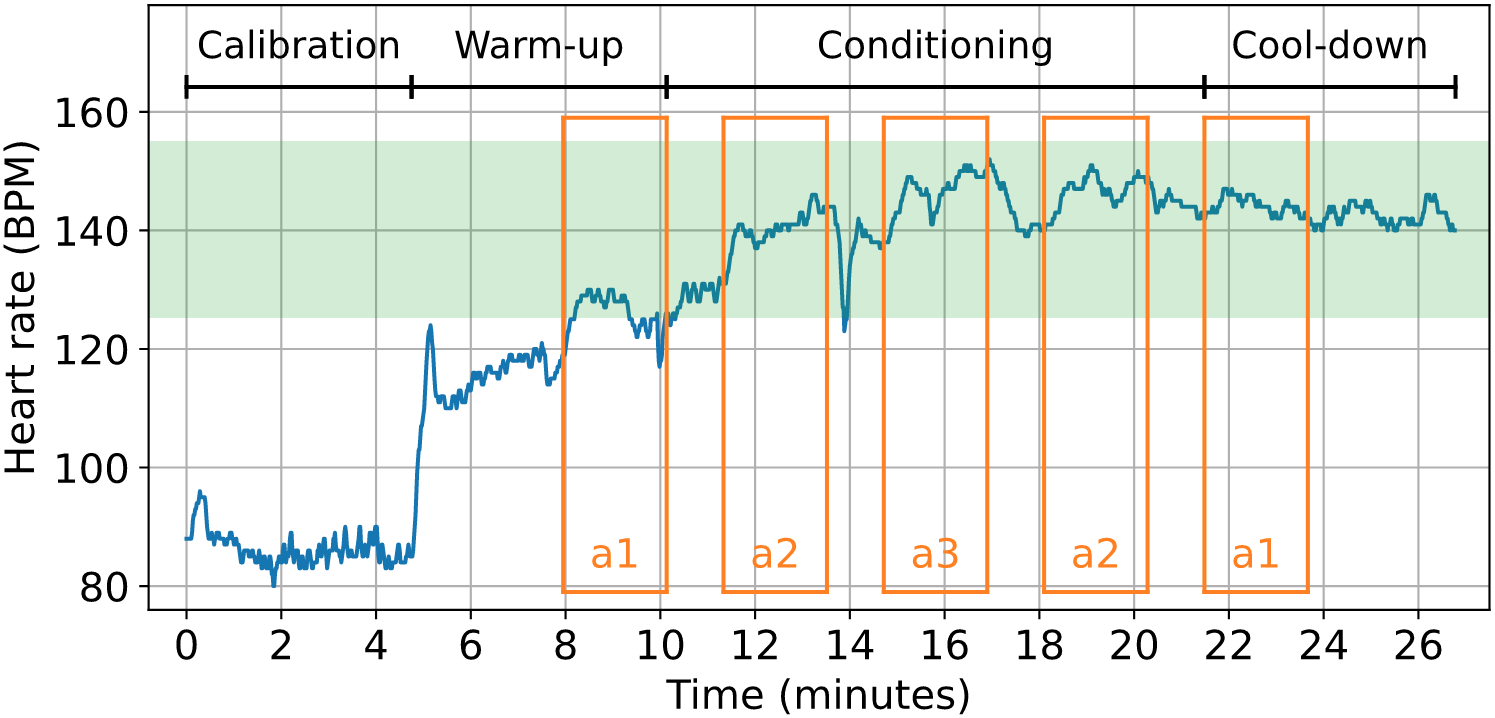

Technological advancements in creating and commercializing novel unobtrusive and wearable physiological sensors generate new opportunities to develop adaptive human-robot interaction (HRI) scenarios. Detecting complex human states such as engagement and stress when interacting with social agents could bring numerous advantages to create meaningful interactive experiences. Despite being widely used to explain human behaviors in post-interaction analysis with social agents, using bodily signals to create more adaptive and responsive systems remains an open challenge. This paper presents the development of an open-source, integrative, and modular library created to facilitate the design of physiologically adaptive HRI scenarios. The HRI Physio Lib streamlines the acquisition, analysis, and translation of human body signals to additional dimensions of perception in HRI applications using social robots. The software framework has four main components: signal acquisition, processing and analysis, social robot and communication, and scenario and adaptation. Information gathered from the sensors is synchronized and processed to allow designers to create adaptive systems that can respond to detected human states. This paper describes the library and presents a use case that uses a humanoid robot as a cardio-aware exercise coach that uses heartbeats to adapt the exercise intensity to maximize cardiovascular performance. The main challenges, lessons learned, scalability of the library, and implications of the physio-adaptive coach are discussed.
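To make the "closing-the-loop" idea in the abstract concrete, here is a minimal sketch of how a cardio-aware coach might turn heartbeats into an intensity decision. It is an illustration only and does not use or reproduce the HRI Physio Lib API: the function names, the discrete intensity levels, the 50-70% heart-rate-reserve zone, and the `220 - age` estimate of maximum heart rate are all assumptions chosen for the example.

```python
from statistics import mean


def bpm_from_ibi(ibi_seconds):
    """Mean heart rate (beats per minute) from inter-beat intervals in seconds."""
    return 60.0 / mean(ibi_seconds)


def target_zone(age, resting_bpm, lo_frac=0.5, hi_frac=0.7):
    """Target heart-rate zone from heart-rate reserve.

    Assumption: maximum heart rate is approximated as 220 - age,
    a common rule of thumb, not a value taken from the paper.
    """
    max_bpm = 220 - age
    reserve = max_bpm - resting_bpm
    return (resting_bpm + lo_frac * reserve, resting_bpm + hi_frac * reserve)


def adapt_intensity(level, bpm, zone, min_level=1, max_level=5):
    """Raise intensity below the zone, lower it above, hold inside it."""
    low, high = zone
    if bpm < low:
        level += 1
    elif bpm > high:
        level -= 1
    # Keep the level inside the range of exercises the (hypothetical) robot offers.
    return max(min_level, min(max_level, level))


if __name__ == "__main__":
    zone = target_zone(age=30, resting_bpm=60)
    bpm = bpm_from_ibi([0.55, 0.54, 0.56])  # hypothetical sensor window
    print(adapt_intensity(level=3, bpm=bpm, zone=zone))
```

In a full system, the robot's behavior (speech, gesture, exercise pace) would be driven by the returned level, and the loop would repeat on each new window of sensor data.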


How to cite?

@inproceedings{kothig2021connecting,
  title={Connecting Humans and Robots Using Physiological Signals -- Closing-the-Loop in {HRI}},
  author={Kothig, Austin and Mu{\~n}oz, John and Akgun, Sami Alperen and Aroyo, Alexander M. and Dautenhahn, Kerstin},
  booktitle={2021 30th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN)},
  publisher={IEEE},
  month={August},
  year={2021},
  url={http://dx.doi.org/10.1109/RO-MAN50785.2021.9515383},
  doi={10.1109/ro-man50785.2021.9515383}
}