Speech envelope dynamics for noise-robust auditory scene analysis in robotics

Rea F., Kothig A., Grasse L., Tata M.

Published in International Journal of Humanoid Robotics 2021 (IJHR)

Abstract

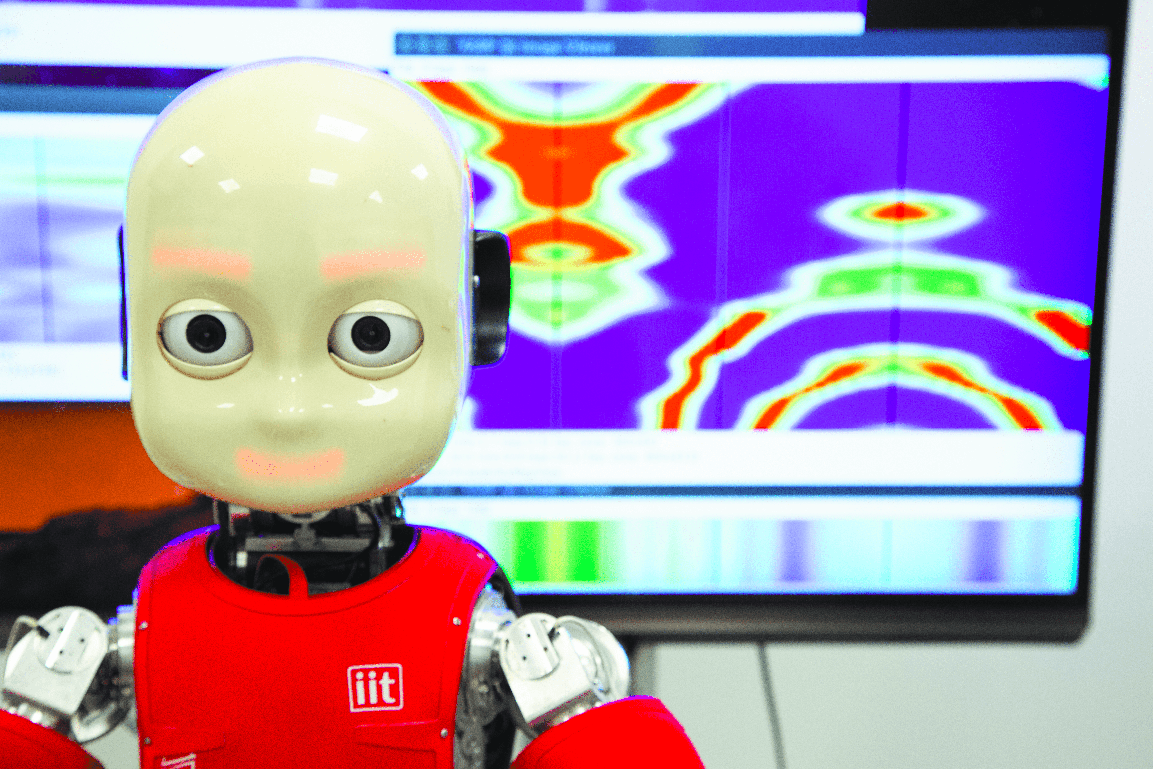

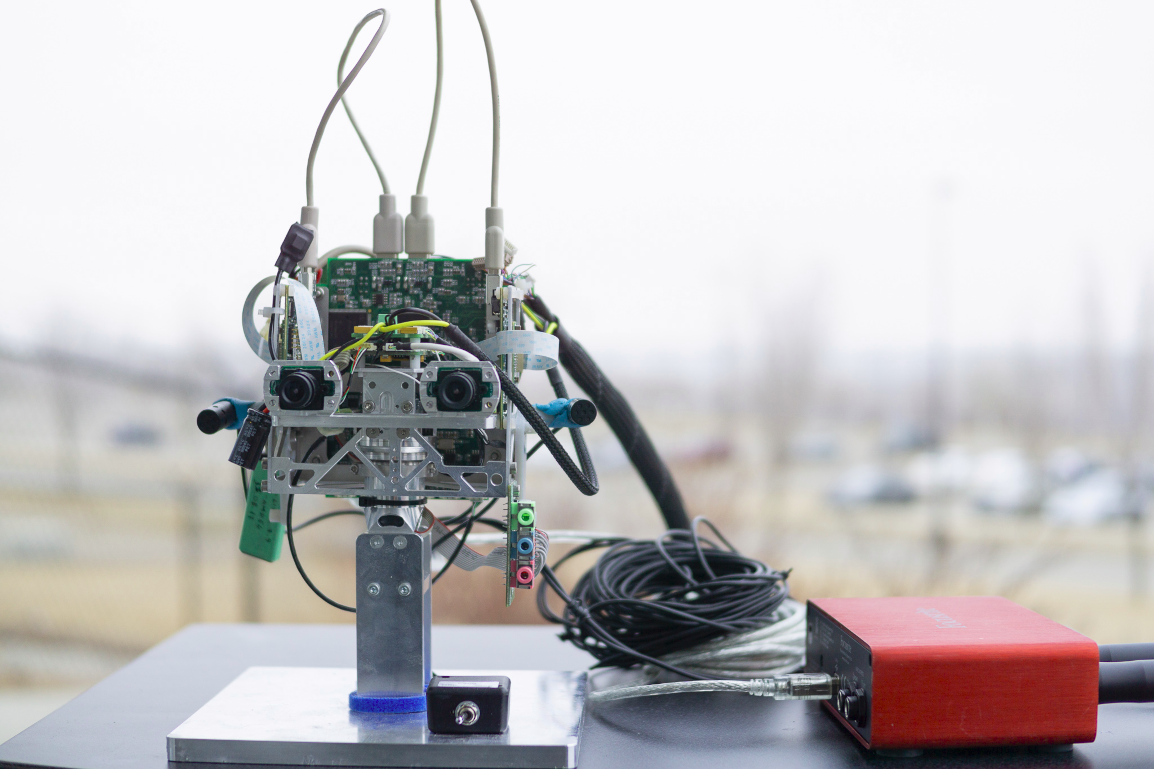

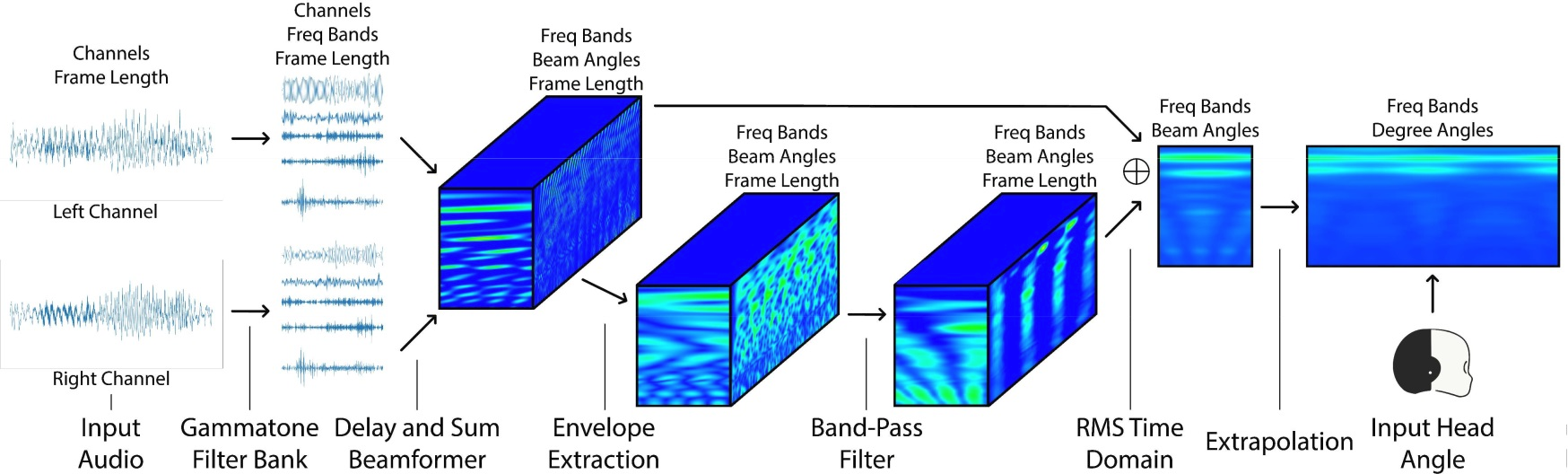

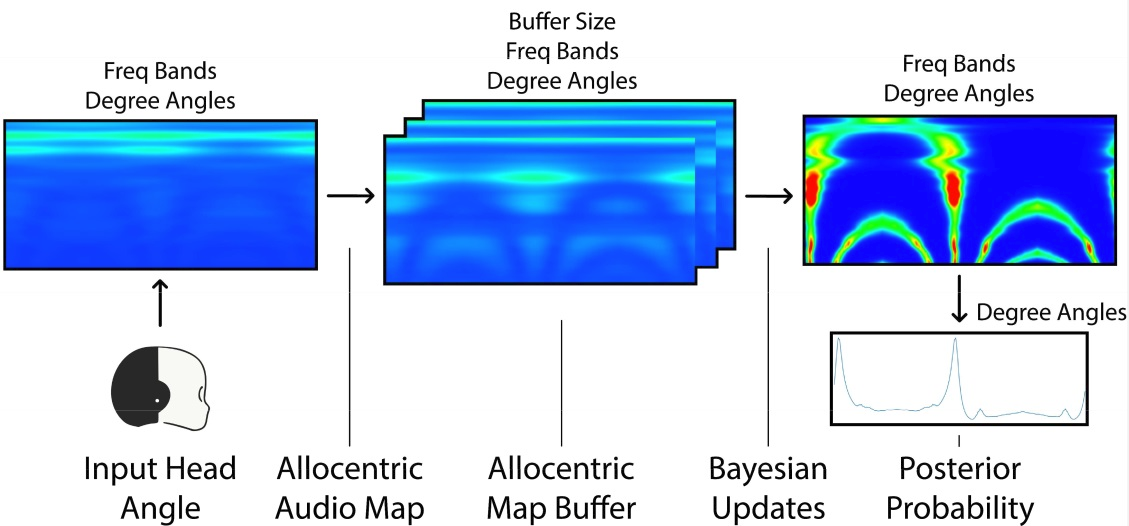

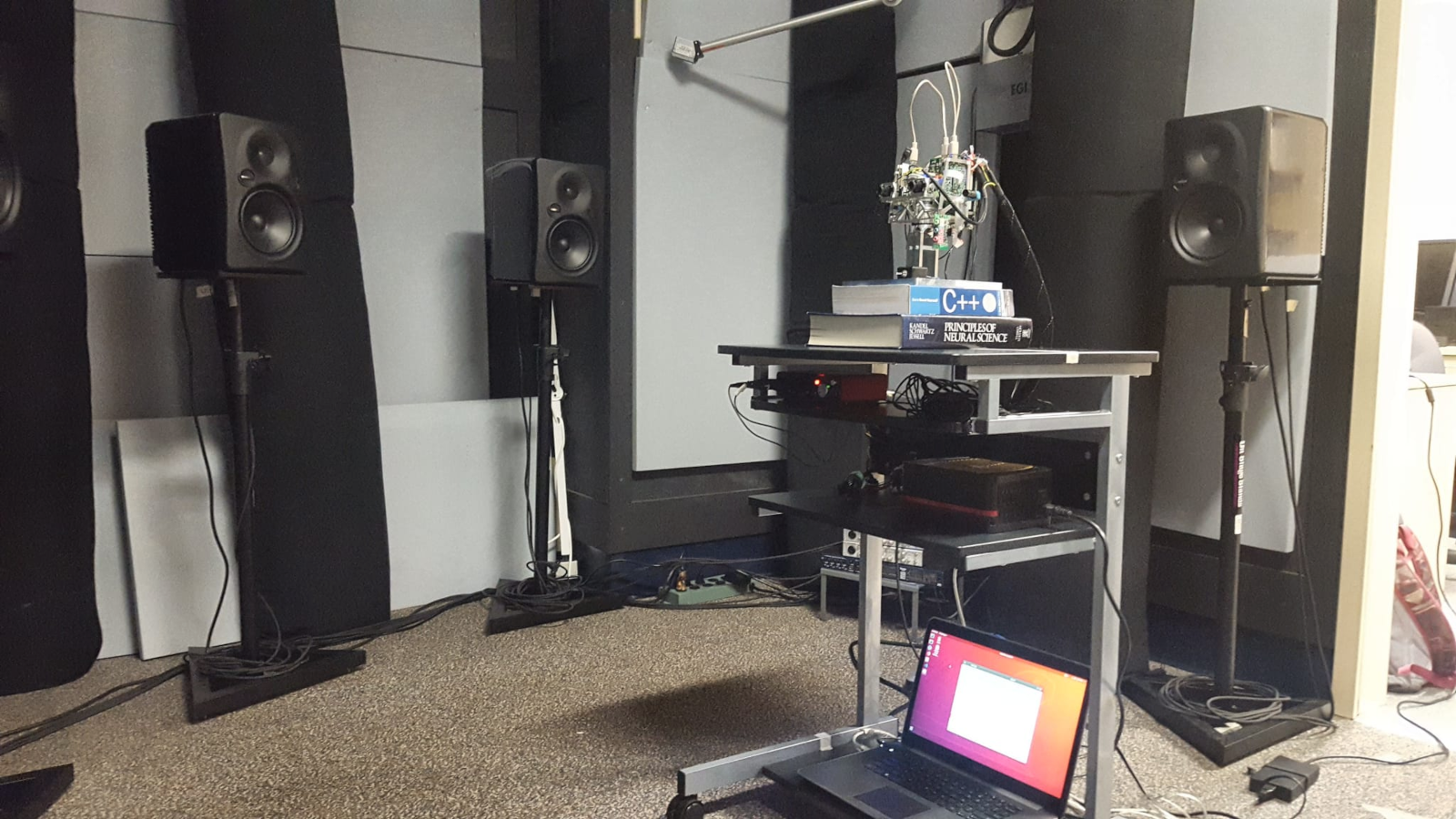

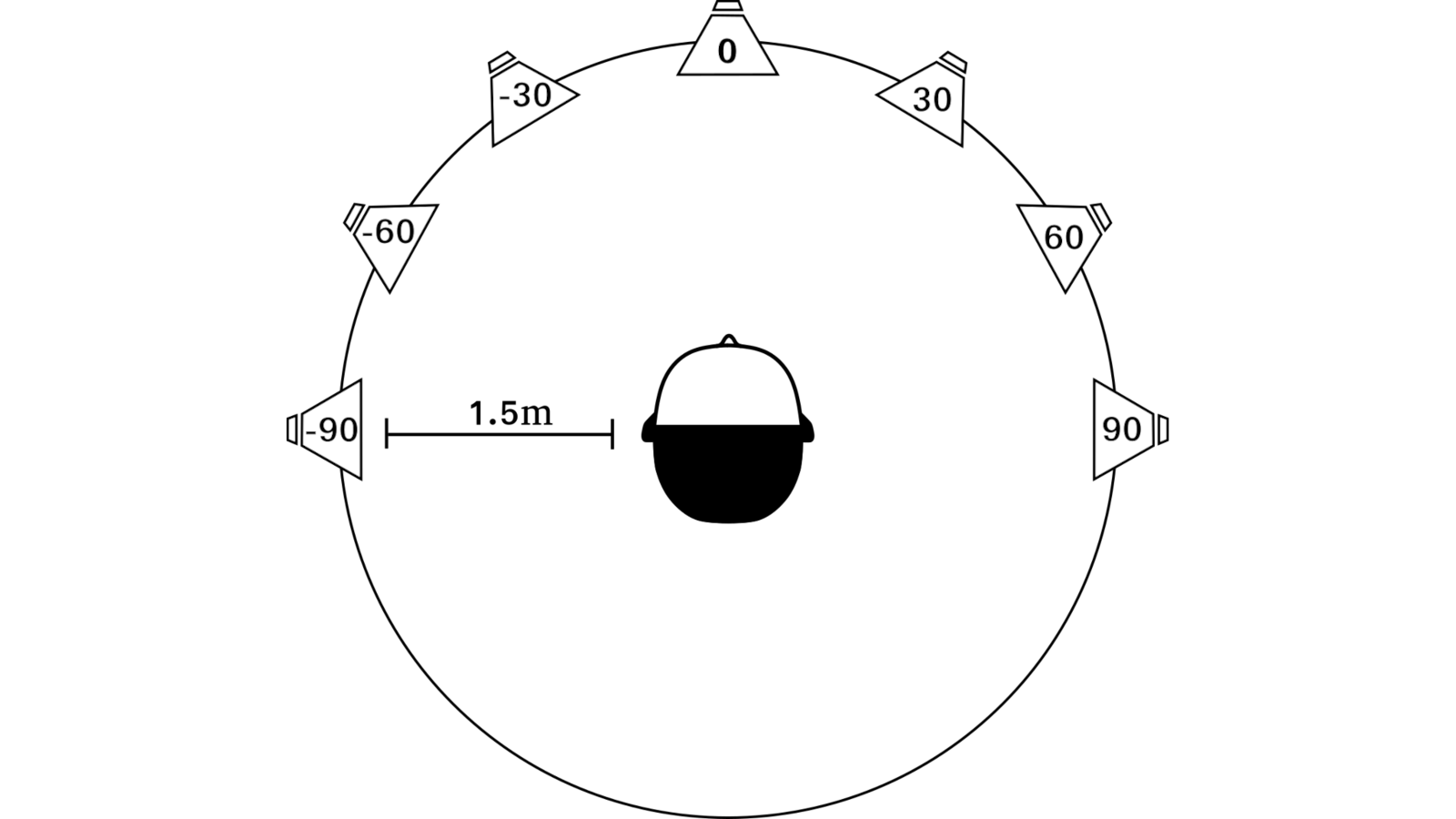

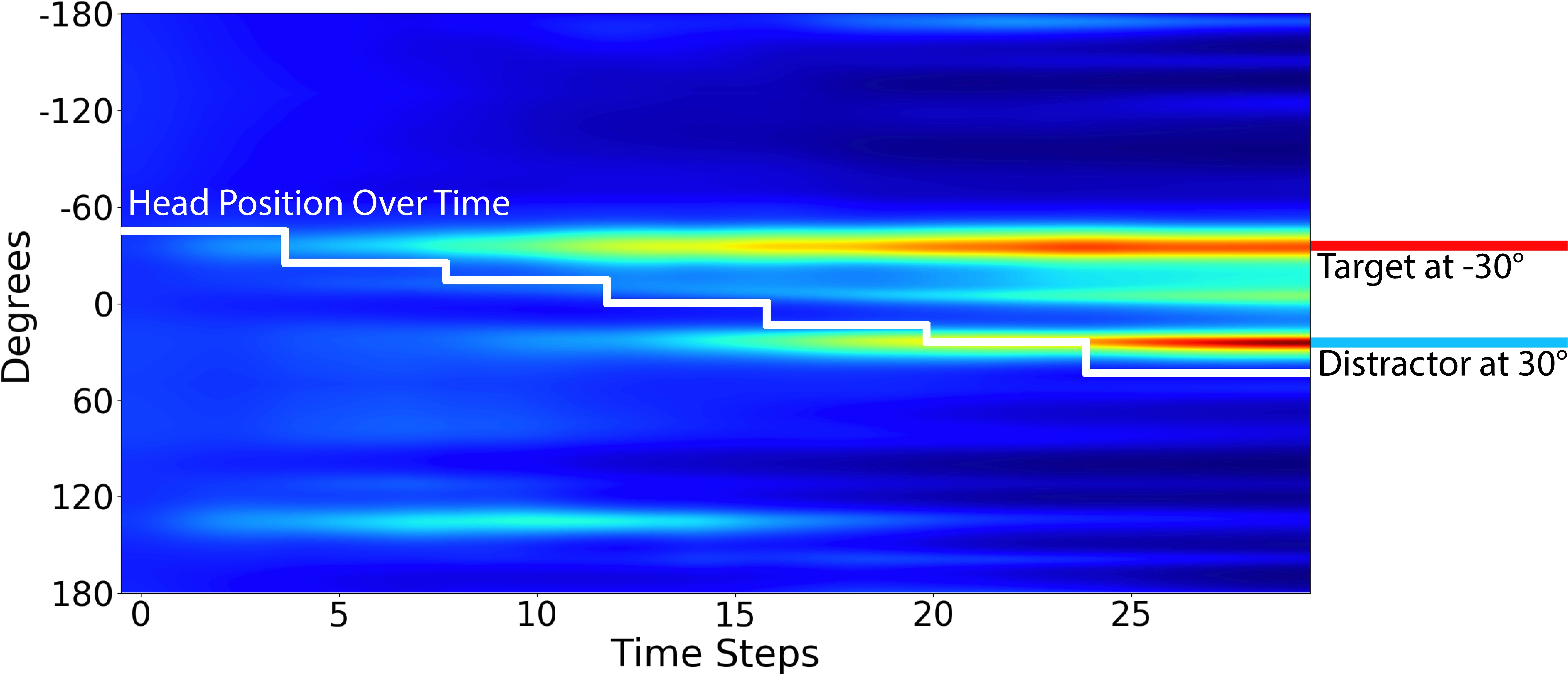

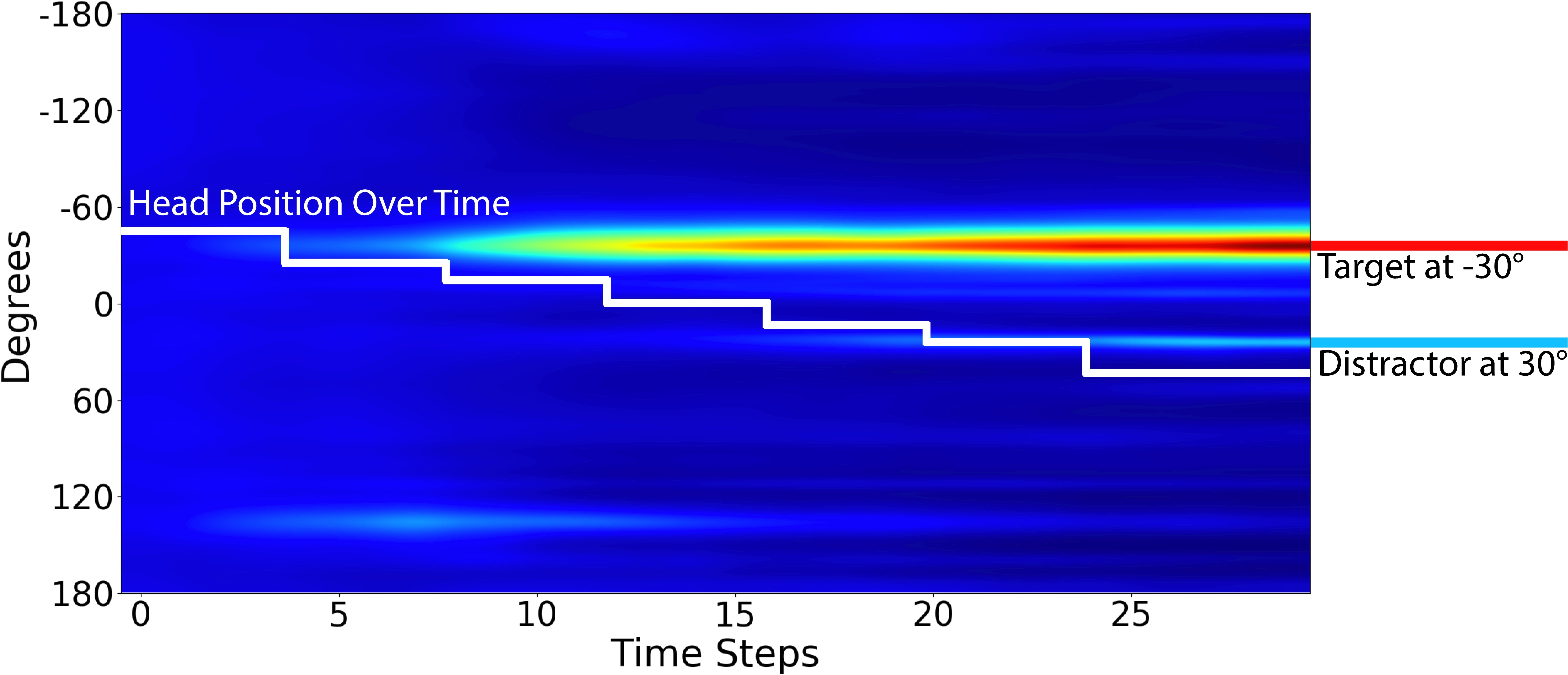

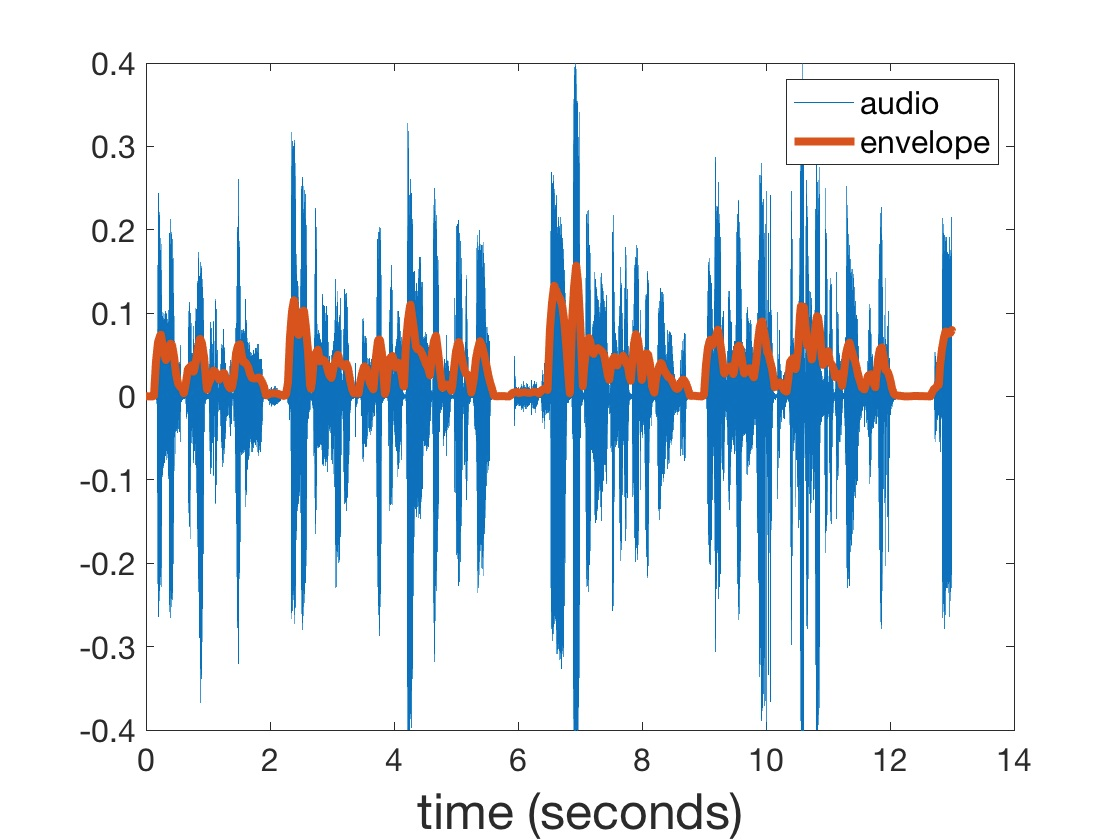

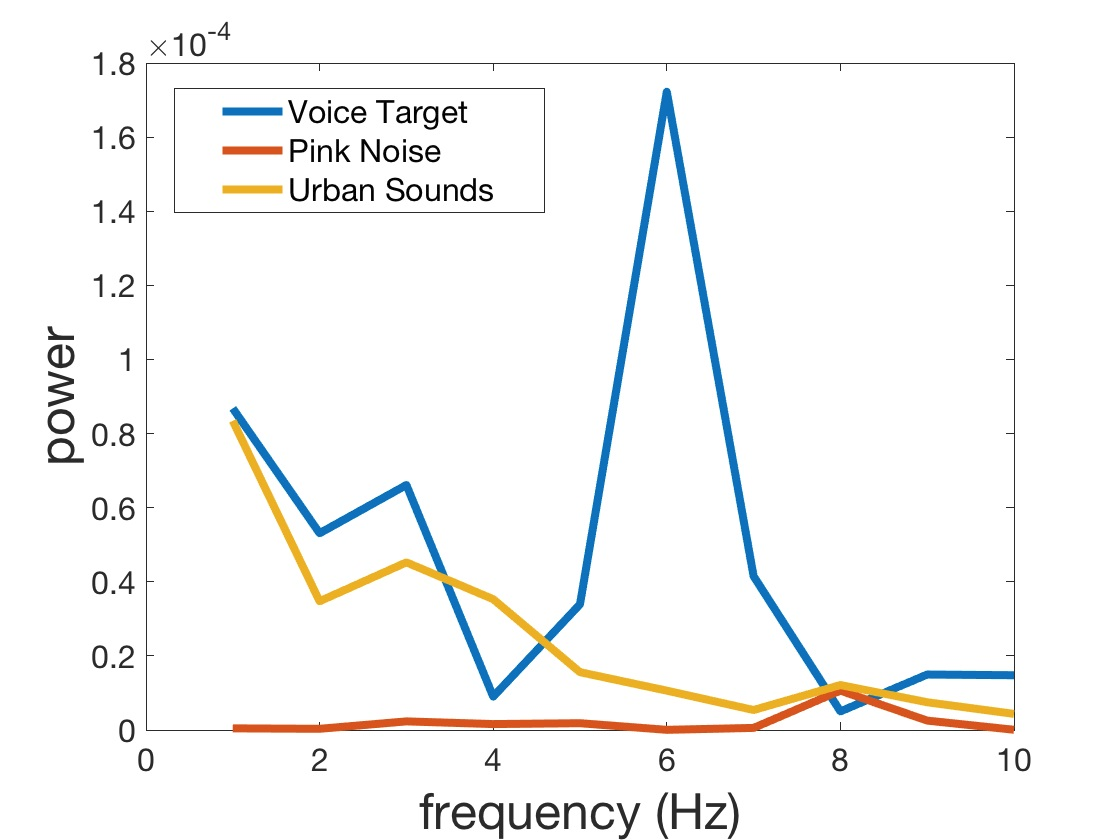

Humans make extensive use of auditory cues to interact with other humans, especially in challenging real-world acoustic environments. Multiple distinct acoustic events usually mix together in a complex auditory scene. The ability to separate and localize mixed sound in complex auditory scenes remains a demanding skill for binaural robots. In fact, binaural robots are required to disambiguate and interpret the environmental scene with only two sensors. At the same time, robots that interact with humans should be able to gain insights about the speakers in the environment, such as how many speakers are present and where they are located. For this reason, the speech signal is distinctly important among auditory stimuli commonly found in human-centered acoustic environments. In this paper, we propose a Bayesian method of selectively processing acoustic data that exploits the characteristic amplitude envelope dynamics of human speech to infer the location of speakers in the complex auditory scene. The goal was to demonstrate the effectiveness of this speech-specific temporal dynamics approach. Further, we measure how effective this method is in comparison with more traditional methods based on amplitude detection only.

Images

How to cite?

@article{rea2021speech,
  title={Speech envelope dynamics for noise-robust auditory scene analysis in robotics},
  author={Rea, Francesco and Kothig, Austin and Grasse, Lukas and Tata, Matthew},
  journal={International Journal of Humanoid Robotics},
  volume={17},
  number={06},
  pages={2050023},
  month={January},
  year={2021},
  publisher={World Scientific},
  url={http://dx.doi.org/10.1142/S0219843620500231},
  doi={10.1142/s0219843620500231}
}